Select AI with Retrieval Augmented Generation (RAG)

Select AI with RAG augments your natural language prompt by retrieving content from your specified vector store using semantic similarity search. This reduces hallucinations by using your specific and up-to-date content and provides more relevant natural language responses to your prompts.

Select AI automates the Retrieval Augmented Generation (RAG) process. This technique retrieves data from enterprise sources using AI vector search and augments user prompts for your specified large language model (LLM). By leveraging information from enterprise data stores, RAG reduces hallucinations and generates grounded responses.

RAG uses AI vector search on a vector index to find data that is semantically similar to the specified question. A vector store processes vector embeddings, which are mathematical representations of data points such as text, images, and audio. These embeddings capture the meaning of the data, enabling efficient processing and analysis. For more details on vector embeddings and AI vector search, see Overview of AI Vector Search.

Select AI integrates with AI vector search available in Oracle Autonomous AI Database 26ai for similarity search using vector embeddings.

Topics

- Build your Vector Store

- Use DBMS_CLOUD_AI to Create and Manage Vector Indexes

  Use the DBMS_CLOUD_AI package to create and manage vector indexes and configure vector database JSON parameters.

- Use In-database Transformer Models

  Select AI RAG enables you to use pretrained ONNX transformer models imported into your Oracle AI Database 26ai instance to generate embedding vectors from document chunks and user prompts.

- Benefits of Select AI RAG

  Simplify querying, enhance response accuracy with current data, and gain transparency by reviewing sources used by the LLM.

Parent topic: Select AI Features

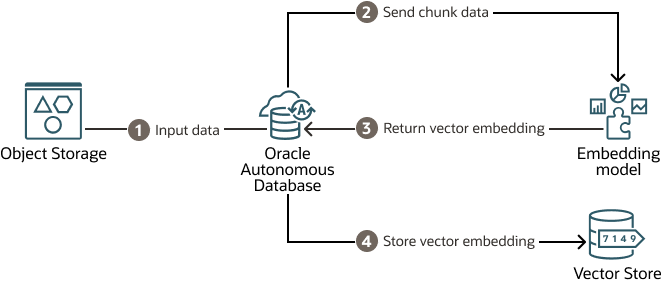

Build your Vector Store

Select AI automatically splits documents into chunks, generates embeddings, stores them in the specified vector store, and updates the vector index as new data arrives.

- Input: Data is initially stored in Object Storage.

- Oracle Autonomous Database retrieves the input data or document, splits it into chunks, and sends the chunks to an embedding model.

- The embedding model processes the chunk data and returns vector embeddings.

- The vector embeddings are then stored in a vector store for use with RAG. As content is added, the vector index is automatically updated.
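The ingestion steps above can be pictured with a small, self-contained Python sketch. It is only an illustration of the chunk, embed, and store flow, not how Select AI implements it: the function names are hypothetical, chunk sizes are counted in words for simplicity, and `toy_embed` is a stand-in hashing function rather than a real embedding model.

```python
import hashlib
import math


def chunk_text(text, chunk_size=8, overlap=2):
    """Split text into word-based chunks; consecutive chunks share `overlap` words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reached the end of the text
    return chunks


def toy_embed(text, dim=16):
    """Hypothetical stand-in for an embedding model: hashed bag of words, L2-normalized."""
    vec = [0.0] * dim
    for word in text.lower().split():
        bucket = int(hashlib.md5(word.encode("utf-8")).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def build_vector_store(documents, chunk_size=8, overlap=2):
    """Chunk each document, embed each chunk, and collect the rows in a list."""
    store = []
    for doc_id, text in documents.items():
        for position, chunk in enumerate(chunk_text(text, chunk_size, overlap)):
            store.append({
                "doc_id": doc_id,
                "position": position,
                "text": chunk,
                "embedding": toy_embed(chunk),
            })
    return store


# Hypothetical input standing in for documents read from Object Storage.
documents = {"policy.txt": "Refund requests are processed within five business days of receipt"}
store = build_vector_store(documents, chunk_size=6, overlap=2)
for row in store:
    print(row["doc_id"], row["position"], row["text"])
```

In this sketch, adding a document means appending new rows to the list. In Select AI, the equivalent refresh is handled by the managed vector index as content is added.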

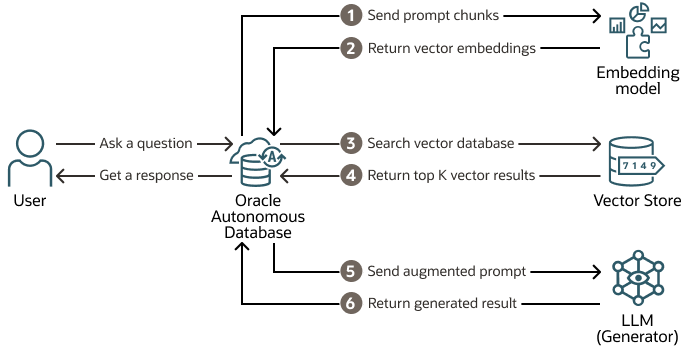

RAG retrieves relevant pieces of information from the enterprise database to answer a user's question. This information is provided to the specified large language model along with the user prompt. Select AI uses this additional enterprise information to enhance the prompt, improving the LLM's response. RAG can enhance response quality with up-to-date enterprise information from the vector store.

- Input: User asks a question (specifies a prompt) using the Select AI narrate action.

- Select AI generates vector embeddings of the prompt using the embedding model specified in the AI profile.

- The vector search index uses the vector embedding of the question to find matching content in the customer's indexed enterprise data (by searching the vector store).

- The vector search returns the top K texts most similar to the input to your Autonomous AI Database instance.

- Autonomous AI Database then sends these top K query results, along with the user question, to the LLM.

- The LLM returns its response to your Autonomous AI Database instance.

- Select AI on Autonomous AI Database provides the response to the user.
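The retrieval and augmentation steps above can be sketched in a few lines of Python. This is a conceptual illustration under stated assumptions, not the Select AI implementation: cosine similarity is assumed as the distance metric, the fixed-vocabulary `embed` function is a hypothetical stand-in for the embedding model named in the AI profile, and the prompt template is invented for the example.

```python
import math
import re

# Hypothetical vocabulary; a real embedding model learns dense vectors instead.
VOCAB = ["refund", "shipping", "days", "password", "reset", "account"]


def embed(text):
    """Toy embedding: count how often each vocabulary word occurs in the text."""
    words = re.findall(r"[a-z]+", text.lower())
    return [float(words.count(term)) for term in VOCAB]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query_embedding, store, k=2):
    """Return the texts of the k stored chunks most similar to the query embedding."""
    scored = [(cosine_similarity(query_embedding, row["embedding"]), row["text"]) for row in store]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]


def build_augmented_prompt(question, contexts):
    """Combine the retrieved chunks and the user question into one prompt for the LLM."""
    numbered = "\n".join(f"[{i}] {text}" for i, text in enumerate(contexts, start=1))
    return f"Answer using only the context below.\n\nContext:\n{numbered}\n\nQuestion: {question}"


chunks = [
    "Refund requests are processed within five business days.",
    "To reset a password, open account settings and choose reset.",
    "Standard shipping takes three to five days.",
]
store = [{"text": text, "embedding": embed(text)} for text in chunks]

question = "How do I reset my password?"
contexts = top_k(embed(question), store, k=1)
print(build_augmented_prompt(question, contexts))
```

The augmented prompt is what would be sent to the LLM. Keeping the retrieved chunks numbered also makes it straightforward to report which sources informed the answer.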


Use DBMS_CLOUD_AI to Create and Manage Vector Indexes

Use the DBMS_CLOUD_AI package to create and manage vector indexes and configure vector database JSON parameters.

If you do not want table data or vector search documents to be sent to an LLM, a user with administrator privileges can disable such access for all users of the given database. This, in effect, disables the narrate action for RAG.

You can configure AI profiles for providers listed in Select your AI Provider and LLMs through the DBMS_CLOUD_AI package.

See Also:

- Create a vector index: CREATE_VECTOR_INDEX Procedure.

- Manage vector index profiles and other AI profiles: Summary of DBMS_CLOUD_AI Subprograms.

- Query vector index views: DBMS_CLOUD_AI Views.


Use In-database Transformer Models

Select AI RAG enables you to use pretrained ONNX transformer models imported into your Oracle AI Database 26ai instance to generate embedding vectors from document chunks and user prompts.

To use Select AI RAG with an in-database transformer model, you must first import a pretrained ONNX-format transformer model into your Oracle AI Database 26ai instance. You can also use other transformer models from supported AI providers.

See Example: Select AI with In-database Transformer Models to explore the feature.


Benefits of Select AI RAG

Simplify querying, enhance response accuracy with current data, and gain transparency by reviewing sources used by the LLM.

- Simplify data querying and increase response accuracy: Enable users to query enterprise data using natural language and provide LLMs with detailed context from enterprise data to generate more accurate and relevant responses, reducing instances of LLM hallucinations.

- Up-to-date information: Provide LLMs access to current enterprise information using vector stores, eliminating the need for costly, time-consuming fine-tuning of LLMs trained on static data sets.

- Seamless integration: Integrate with Oracle AI Vector Search for streamlined data handling and enhanced performance.

- Automated data orchestration: Automate orchestration steps with a fully managed Vector Index pipeline, ensuring efficient processing of new data.

- Understandable contextual results: Access and retrieve the sources used by the LLM from vector stores, ensuring transparency and confidence in results. View and extract data in natural language text or JSON format for easier integration and application development.
